Claude 4 vs. DeepSeek-R1 (0528)

Welcome Back to the Channel!

Anthropic just dropped its latest models, Claude 4 Sonnet and Claude 4 Opus, which are currently among the best coding assistants out there.

But that's not all: on May 28, DeepSeek also pushed out a small update to its R1 model. The update mainly improves chain-of-thought (CoT) reasoning and coding performance.

Quick Comparison

I ran a few tests comparing Claude 3.7 Sonnet, Claude 4 Sonnet, and the updated DeepSeek-R1 (0528). One of the most interesting ones involved a simple task:

I asked each model to generate a single HTML file that includes CSS and JavaScript to create an animated weather card.

Here's what they came up with:

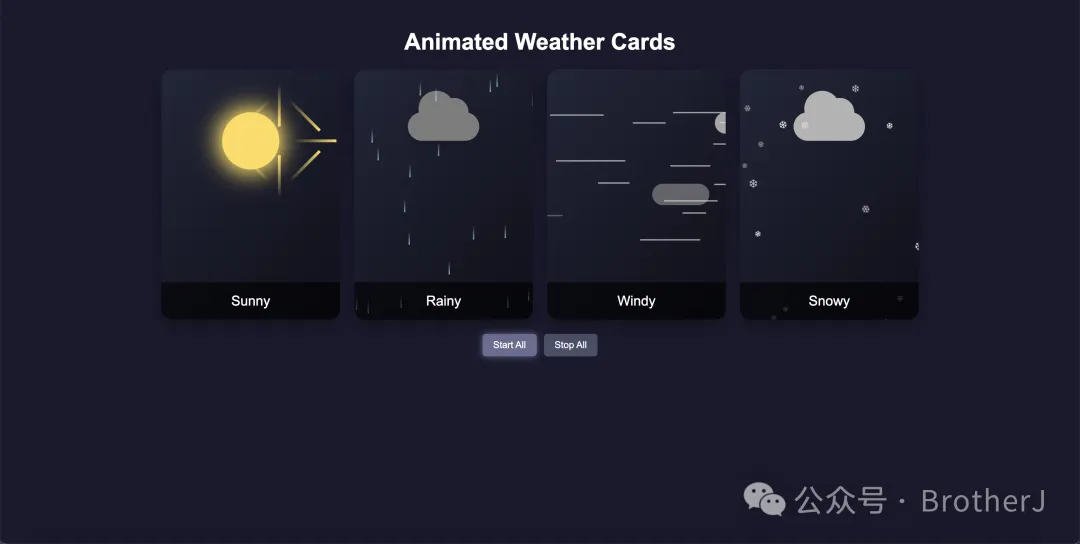

Claude 3.7 Sonnet:

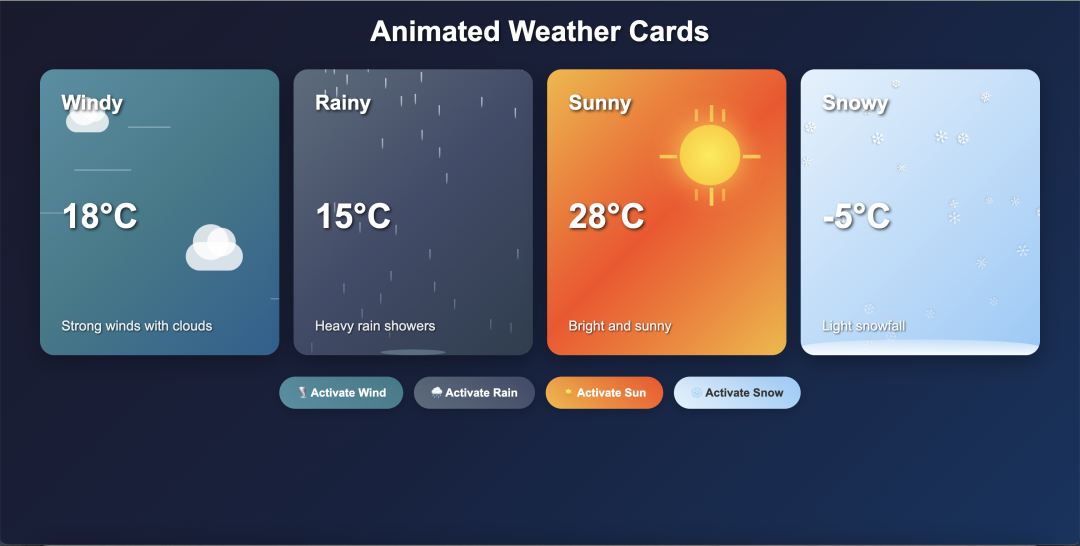

Claude 4 Sonnet:

DeepSeek-R1 (0528):

Thoughts on Claude 4 Sonnet

To be honest, Claude 4 Sonnet is the first one I'd eliminate. I don't know why it went with such a flashy UI design; it feels like a step back from 3.7. I've always appreciated the clean, thoughtful designs 3.7 delivers, and they consistently impress me.

Claude 4 Sonnet's design might have more mainstream appeal and be easier for the general public to accept, but it's way too colorful. And those random emojis? My guess is that idea was borrowed from ChatGPT. Not a fan.

So… How Good is Claude 4?

Lately, I've been seeing the same question pop up:

How good is Claude 4?

As someone who relies heavily on Cursor, I jumped in the moment Anthropic made the announcement. I:

- Skimmed through their blog post

- Looked at side-by-side comparisons between the models

- Tested the Claude bot in GitHub PRs

- Revisited earlier terminal-based coding projects

Capability

On raw capability, Sonnet 4 and Opus 4 are top-tier, no contest. They pretty much outclass everything else right now, including GPT-4o and o3. In agentic workflows especially, Claude 4 blows o3 out of the water.

Real-World Usage

I've been messing around with some Node.js projects, just for fun. I tested both Claude 3.7 and Claude 4 Sonnet, and the difference was clear:

- Sonnet 4 handles backend tasks much better.

- It stands out for database work.

- Code suggestions are cleaner, more readable, and generally feel more solid than 3.7's.

But when it comes to:

- UI

- Layout

- Recreating designs from images

- Design tasks

Claude 4 doesn't come close to 3.7.

Claude 3.7 surprises me with creative ideas. It doesn't just follow cookie-cutter patterns; it takes bold swings. Claude 4, in contrast, feels like it's building an overly styled UI from a decade ago—visually rich but not practical.

"Claude 4 Thinking"

I also tried both Claude 4 and Claude 4 "Thinking", and honestly, I didn't notice much of a difference.

"Thinking" feels more like it's analyzing what I'm doing rather than planning the next move.

Cost Consideration

There are already tons of UI comparison posts out there—just do a quick search and you'll find plenty.

But here's something interesting I found using Cursor:

| Model | Relative cost in Cursor (Claude 3.7 = 1x) |
|---|---|
| Claude 3.7 | 1x |
| Claude 4 Sonnet | 0.5x |
| Claude 4 Opus | 0.75x |

Surprisingly, both Claude 4 models are cheaper to use in Cursor than Claude 3.7!
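To put those multipliers in concrete terms, here's a quick calculation of how many requests the same budget buys with each model. It's a minimal sketch: the multipliers are the ones from the table (what I saw in Cursor, not official pricing), and the budget of 300 "Claude 3.7-equivalent" requests is a hypothetical number picked purely for illustration.

```python
# Relative per-request cost multipliers as listed in the table (Claude 3.7 = 1x).
# These are the figures observed in Cursor for this post, not official pricing.
MULTIPLIERS = {
    "Claude 3.7": 1.0,
    "Claude 4 Sonnet": 0.5,
    "Claude 4 Opus": 0.75,
}

# Hypothetical budget, expressed as the cost of this many Claude 3.7 requests.
BUDGET_IN_37_REQUESTS = 300


def requests_for_budget(budget: float, multiplier: float) -> int:
    """Return how many whole requests fit in `budget` at the given cost multiplier."""
    return int(budget // multiplier)


if __name__ == "__main__":
    for model, mult in MULTIPLIERS.items():
        n = requests_for_budget(BUDGET_IN_37_REQUESTS, mult)
        print(f"{model}: {n} requests")
```

Running it prints `Claude 3.7: 300 requests`, `Claude 4 Sonnet: 600 requests`, and `Claude 4 Opus: 400 requests`, so at these multipliers Sonnet 4 stretches the same budget twice as far as 3.7.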

Problem-Solving Ability

In the past, when Claude 3.7 edited files by echoing content from the terminal, it would often get stuck in loops, especially when a change failed to apply correctly. It would spiral, repeating the same terminal commands over and over.

Claude 4 takes a smarter approach:

- Tries multiple solutions

- Uses emoji-style reactions to break the loop

- Reapplies intelligently if something fails

- Rereads context and suggests new approaches

It's genuinely impressive at resolving tricky situations.

Final Tip

Before Trae (ByteDance's AI IDE) starts charging, I'd suggest trying Claude 4's agent mode there late at night, when traffic is low.

Or better yet:

Consider getting a Cursor Pro subscription—in terms of interactivity and Agent integration, Cursor is hard to beat.

Happy coding! 👍